How can real scientific performance be measured?

Sep 16

How can you tell which researchers will be active and successful in the near future? The question matters for allocating financial resources, and it is as important for future researchers as it is for higher education institutions. The science metrics used so far do not give a complete picture. Dr. Balázs Győrffy and his research team have developed software based on a new methodology that allows real-time, objective measurement.

Software has been developed to measure scientific performance better than the usual methods do. Why did you think this was important?

Like it or not, scientific performance has to be measured somehow in order to be improved. We must also strive to ensure that the amount of money available to fund scientific research is distributed efficiently.

There are several platforms that measure scientific performance today, for example Google Scholar, Scopus, Web of Science, OpenAlex and ResearchGate. They all show how many articles someone has, what their H-index is, how many citations they have in total, and so on. Some add an extra indicator; Google Scholar, for example, adds the i10-index. There are subtle differences between them, but what they have in common is that they all use aggregated indicators. In Google Scholar, for example, the user clicks on a university and gets a ranking of its researchers according to these indicators. But this is grossly unfair in many ways! A young researcher, say 30-35 years old, cannot be expected to perform as well as a 60-65 year old. The second major problem is that these systems do not take disciplinary differences into account. The typical number of publications in mathematics, for example, is completely different from that in physics. After the marked differences in age and discipline, the third problem is that some people are very active for a time but then slow down. Someone may start with a dynamic career but then "rest on their laurels."

The established indicators are not able to track how active a person is at the moment.

The giants of the past distort the current perception. The fourth problem is with authorship. There are disciplines, mathematics or economics for example, where it does not matter who is named as the first author, and it is not common for more than three authors to be listed on an article. However, in medicine, biology, physics or chemistry, it does matter who is first and who is last among the authors. Typically, the first author has done most of the work, the last author is usually the senior researcher who oversaw the implementation of the research, and a middle author has not contributed to the publication to the same extent. Many measurement methods cannot distinguish between authors in this respect. Thus, someone who is typically a middle author will be treated as a very active researcher by the established systems. This becomes a problem when a PhD student selects a supervisor on this basis: with a less active researcher, the student is likely to waste time and end up with no articles of their own.
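The difference between counting every paper and counting only first- or last-author papers can be sketched in a few lines of Python. This is a minimal illustration, not the team's implementation: the function names, the author names and the paper list are all invented, and the first/last convention only makes sense in disciplines where author order carries meaning.

```python
def author_role(author_list, name):
    """Return 'first', 'last', 'middle', or None if the name is not listed.

    A single-author paper counts as 'first'.
    """
    if name not in author_list:
        return None
    if author_list[0] == name:
        return "first"
    if author_list[-1] == name:
        return "last"
    return "middle"


def total_count(papers, name):
    """Number of papers on which the researcher appears in any position."""
    return sum(1 for authors in papers if name in authors)


def lead_author_count(papers, name):
    """Number of papers on which the researcher is first or last author."""
    return sum(1 for authors in papers
               if author_role(authors, name) in ("first", "last"))


# Invented author lists, for illustration only.
papers = [
    ["A. Kovacs", "B. Nagy", "C. Toth"],
    ["B. Nagy", "A. Kovacs", "C. Toth"],
    ["C. Toth", "D. Kiss", "A. Kovacs", "B. Nagy"],
    ["D. Kiss", "A. Kovacs", "B. Nagy", "C. Toth"],
]

for name in ("A. Kovacs", "B. Nagy"):
    print(name, total_count(papers, name), lead_author_count(papers, name))
```

Both hypothetical researchers appear on four papers, so an aggregated count treats them as equally productive, while the position-aware count separates them.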

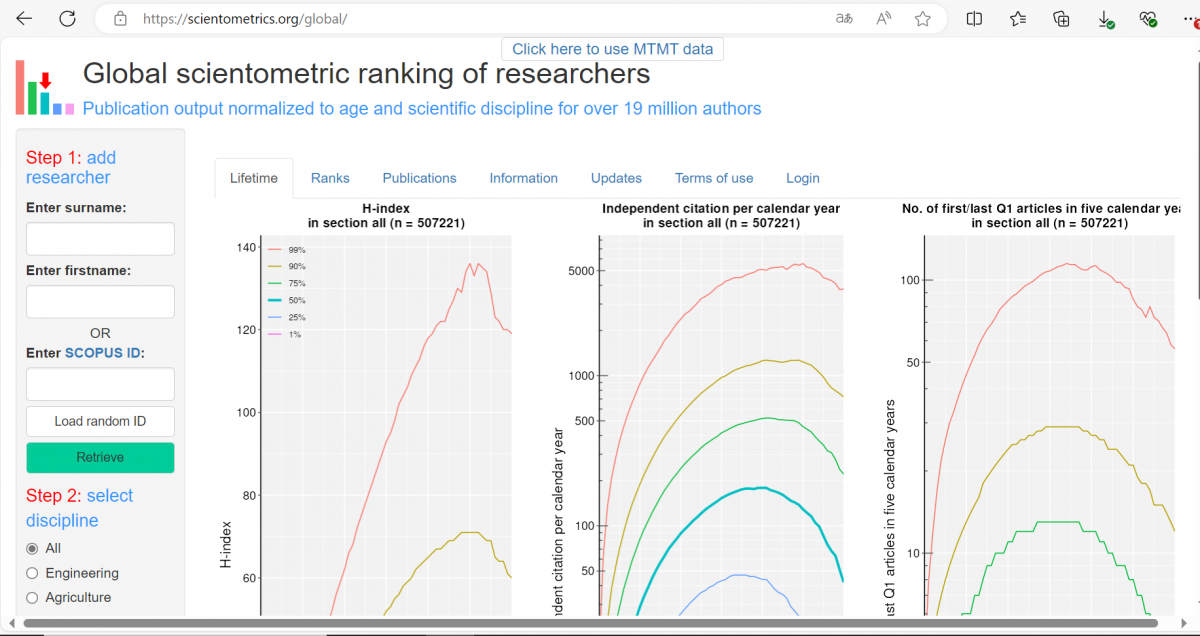

Scientometrics was created to address these injustices. It comes in two versions: one specifically for Hungary and one with a global database.

How did you set out to build a global system?

We downloaded the data of 500,000 researchers worldwide and calculated their research life: we treated their first publication as their birth, and then, for each researcher and each year, we calculated their H-index, the number of articles they had published in the last five years as lead author, and their annual citations. This created a normalised reference database. When a new researcher enters the system, let's say a 35-year-old chemist, we look at how that researcher compares with the performance that all chemists older than 35 had at age 35. In this way, we take into account both age and discipline, as well as authorship positions.
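The comparison against a reference database can be illustrated with a small Python sketch. It is only an assumption of how such a lookup might work, not the published method: the function names, the use of a simple percentile, and all numbers are invented, and `career_age` is counted in years since the first publication.

```python
from bisect import bisect_right
from collections import defaultdict


def build_reference(records):
    """Group indicator values by (discipline, career_age) and sort each group.

    records: iterable of (discipline, career_age, value) tuples.
    """
    groups = defaultdict(list)
    for discipline, career_age, value in records:
        groups[(discipline, career_age)].append(value)
    return {key: sorted(values) for key, values in groups.items()}


def percentile(reference, discipline, career_age, value):
    """Percentage of the matching cohort whose value is at or below `value`."""
    cohort = reference.get((discipline, career_age))
    if not cohort:
        raise KeyError(f"no reference data for {discipline!r} at career age {career_age}")
    return 100.0 * bisect_right(cohort, value) / len(cohort)


# Invented H-index values, for illustration only.
records = [
    ("chemistry", 10, 3), ("chemistry", 10, 5), ("chemistry", 10, 8),
    ("chemistry", 10, 8), ("chemistry", 10, 12),
    ("mathematics", 10, 1), ("mathematics", 10, 2),
    ("mathematics", 10, 3), ("mathematics", 10, 4),
]
reference = build_reference(records)

# The same H-index of 4 ranks very differently in the two cohorts.
print(percentile(reference, "chemistry", 10, 4))    # 20.0
print(percentile(reference, "mathematics", 10, 4))  # 100.0
```

Keying the reference table on both discipline and career age is what lets one raw value be judged against comparable peers only.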

The good thing about this system, in our opinion, is that it is much fairer than the previous system of browsing aggregated figures. The downside is that a large proportion of people will not be satisfied with their own performance because they will not be in the top category.

I suppose it's a challenge for researchers used to measuring past performance to (also) measure present performance.

The system is complex because we have tried to ensure that it cannot be easily manipulated. Experience shows that the H-index, for example, can easily be increased by adding targeted self-citations to one's own articles. There is also a fourth parameter built into our system: the number of highly cited articles. After all, it is one thing to write a lot of articles, but what if you publish fewer articles that are very good? We have tried to address this with a dedicated indicator.
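How sensitive the H-index is to self-citation can be shown with the standard definition (the largest h such that h papers have at least h citations each). The sketch below is illustrative only: the per-paper numbers are invented, and it does not reproduce how the system itself handles self-citations.

```python
def h_index(citation_counts):
    """Largest h such that h papers have at least h citations each."""
    ranked = sorted(citation_counts, reverse=True)
    h = 0
    for rank, cites in enumerate(ranked, start=1):
        if cites >= rank:
            h = rank
        else:
            break
    return h


# (total citations, of which self-citations) per paper; invented numbers.
papers = [(10, 0), (6, 1), (4, 2), (4, 3), (3, 1)]

with_self = h_index([total for total, _ in papers])
without_self = h_index([total - own for total, own in papers])

print(with_self)     # 4
print(without_self)  # 2
```

In this made-up record, seven self-citations spread over four papers are enough to double the H-index, which is why a single aggregated indicator is easy to inflate.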

The system is also flexible enough to account for the effect of a researcher having a child. At the moment, these data are entered into the system automatically from the MTMT.

How many people are already using it?

Currently, there are 40,000 people registered in the MTMT in Hungary, with around 1,500 applications per year. Our software is used by around 500 to 600 people per week in Hungary, which means that 5% of researchers look at their own or their colleagues' data and results on a weekly basis.

If I were a PhD student, I would want to be in the company of researchers who have good indicators at a global level.

To search for researchers, we can use publicly available data, including the author's name, email address and affiliation. If someone looks up a researcher, or perhaps themselves, and gets a very bad result, the typical reason is that they are listed under the wrong name in Scopus. Until we set up this system, I did not know myself that I had three "instances" in Scopus (my articles were split between these three virtual researchers), and I had to write to Scopus to have them merged.

Why did you set up this system, what motivated you?

The reason is simple. For years I have been a reviewer for the OTKA, and despite my best efforts, I could not determine which applicant deserved the funding. It was impossible to compare them, because they were all in different fields and of different ages, and their articles looked different. Precisely because of this, and we have a published study on it, the points awarded by the OTKA reviewers show a shockingly low correlation with whether or not a publication is subsequently produced from the research.

Are you planning some kind of training course for UP researchers, or even Hungarian researchers, to teach them how to properly manage these systems?

I have already taught such a course at Semmelweis and I have proposed it to the UP management as well.

It is important to understand that our system does not determine whether a researcher is "excellent" in terms of his/her life's work, but rather how active and productive he/she is in the present.

This is particularly useful for proposal evaluators and proactive PhD students, as well as for those who want to bring together researchers who are currently active in a particular field.

As I understand it, Scientometrics goes beyond the career progression of researchers.

It is also clear that good research indicators have a further impact on institutions and are reflected in their rankings. And rankings are increasingly determining which institutions talented students apply to.

According to our study published a year ago, the number of highly cited research papers currently has the greatest impact on the ranking of a higher education institution. This is understandable: rankings cannot really measure whether, for example, classical philology is taught well. Student satisfaction is also hard to capture, since anyone studying at the university in question will say they are satisfied. What can be measured is the opinion that outsiders have of the institution and objectively measurable academic performance. The latter can be monitored with the Scientometrics system.